Image created by AI

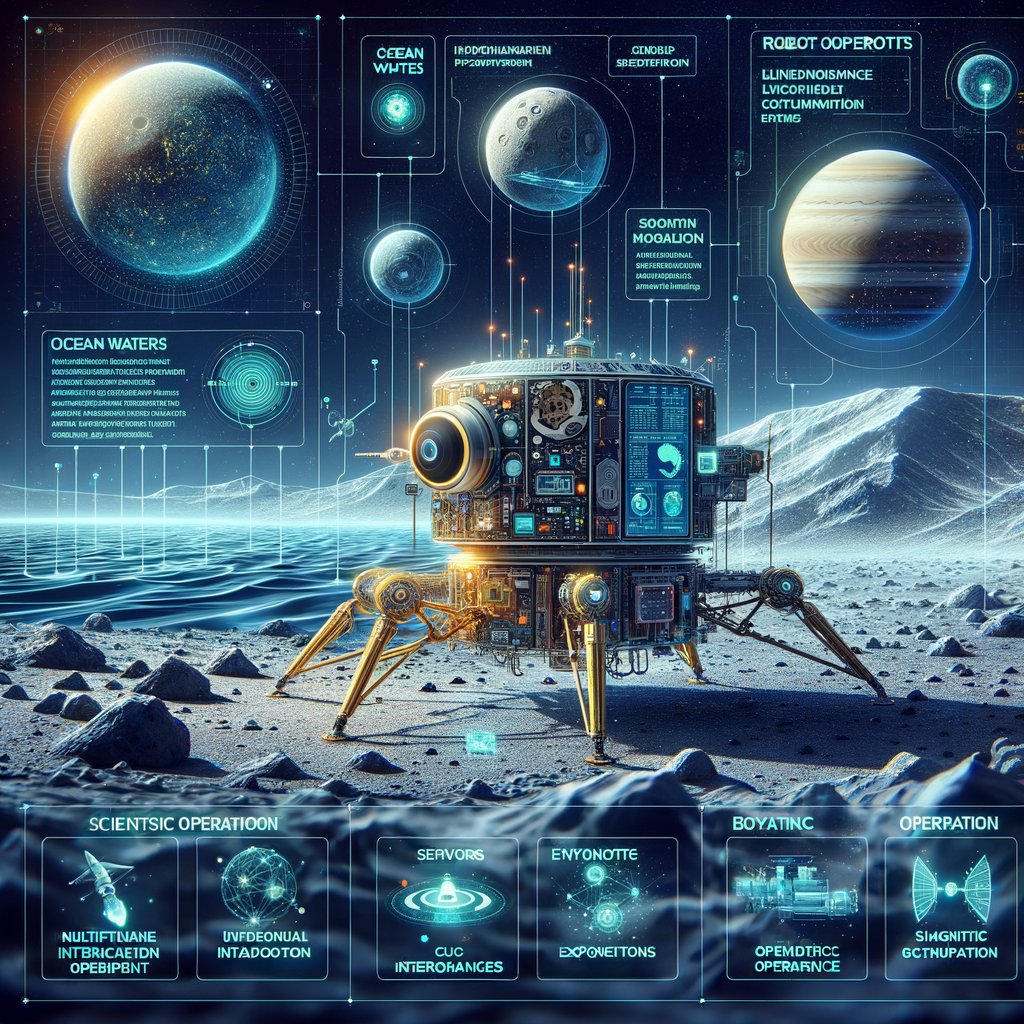

NASA's Bold Leap Towards Autonomous Missions on Icy Ocean Worlds

In the search for life beyond Earth, NASA has committed to exploring the ocean worlds of our solar system, specifically Jupiter's moon Europa and Saturn's moon Enceladus. Because their subsurface oceans make these moons promising places to look for life, NASA is developing technology to simulate and prepare for the extreme conditions such missions will encounter.

Launched in 2018, NASA's program draws on advances in artificial intelligence (AI), from machine learning to causal reasoning. These capabilities are crucial because of the substantial communication delays between Earth and the distant moons, the harsh surface conditions, and the limited battery life of the robotic landers.

Central to this preparation are two testbeds: the physical Ocean Worlds Lander Autonomy Testbed (OWLAT) and the virtual Ocean Worlds Autonomy Testbed for Exploration, Research, and Simulation (OceanWATERS), both developed to refine the autonomous capabilities of spacecraft. OWLAT is based at NASA's Jet Propulsion Laboratory in Southern California, while OceanWATERS is based at NASA's Ames Research Center in Silicon Valley.

OWLAT is designed to mimic a spacecraft lander equipped with a robotic arm for science operations in the low-gravity environments typical of ocean-bearing moons. It features a Stewart platform with six degrees of freedom to simulate lander motion, sensors to measure interaction forces, and a suite of tools for the science operations themselves.

In contrast, OceanWATERS is a software-only, high-fidelity simulation environment that provides both visual output and telemetry. OWLAT also has a simulated counterpart, built on the Dynamics And Real-Time Simulation (DARTS) physics engine, that replicates the physical testbed's behavior. Together, these simulations allow autonomy software to be tested without the overhead of physical deployment.

Both systems expose a Robot Operating System (ROS)-based interface through which operations are commanded and monitored for safety and performance. This monitoring keeps the systems within operational bounds and flags anomalies that could indicate mission-threatening conditions.
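To make the idea of staying within operational bounds concrete, here is a minimal sketch of a telemetry limit check in plain Python. It is not the testbeds' actual monitoring code and does not use the ROS API; the channel names, limit values, and the `check_telemetry` helper are all hypothetical, chosen only to illustrate the pattern.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    low: float
    high: float


# Hypothetical telemetry channels and limits, for illustration only.
LIMITS = {
    "arm_force_n": Limit(0.0, 50.0),
    "battery_v": Limit(24.0, 33.6),
    "joint_temp_c": Limit(-40.0, 60.0),
}


def check_telemetry(sample: dict[str, float]) -> list[str]:
    """Describe every channel that is missing or outside its limits."""
    faults = []
    for name, limit in LIMITS.items():
        value = sample.get(name)
        if value is None:
            faults.append(f"{name}: no data")
        elif not (limit.low <= value <= limit.high):
            faults.append(f"{name}: {value} outside [{limit.low}, {limit.high}]")
    return faults


# One assumed telemetry sample in which the arm force exceeds its limit.
sample = {"arm_force_n": 72.5, "battery_v": 28.1, "joint_temp_c": 12.0}
for fault in check_telemetry(sample):
    print("FAULT", fault)
```

In a real system, a check like this would presumably run on every incoming telemetry message and feed the autonomy software, which then decides whether to pause, retry, or put the lander in a safe state.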

Such autonomy matters because communication delays of up to an hour mean that a robotic lander must operate independently of Earth. It has to make real-time decisions during surface operations, such as collecting samples, assessing site suitability, and handling unexpected equipment failures, all while managing a limited power supply.
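The scale of the delay follows directly from the speed of light. The sketch below computes one-way signal travel time from a distance in astronomical units. The Earth-to-planet distance ranges are rough, assumed figures for illustration (the real values vary continuously with orbital position), and the function name is hypothetical.

```python
AU_KM = 149_597_870.7   # kilometers in one astronomical unit
C_KM_S = 299_792.458    # speed of light in km/s


def one_way_light_minutes(distance_au: float) -> float:
    """One-way signal travel time, in minutes, over a distance in AU."""
    return distance_au * AU_KM / C_KM_S / 60.0


# Approximate (assumed) closest and farthest Earth distances, in AU.
APPROX_RANGE_AU = {
    "Jupiter system (Europa)": (4.2, 6.2),
    "Saturn system (Enceladus)": (8.0, 11.0),
}

for target, (near, far) in APPROX_RANGE_AU.items():
    lo = one_way_light_minutes(near)
    hi = one_way_light_minutes(far)
    print(f"{target}: about {lo:.0f} to {hi:.0f} minutes one way")
```

Under these assumed distances, a one-way signal takes somewhere between about half an hour and an hour and a half, and any command-and-response cycle takes twice that, which is far too slow for an operator on Earth to steer an arm in real time.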

By 2021, NASA had engaged six research teams across the United States to test and enhance these technologies through its Autonomous Robotics Research for Ocean Worlds (ARROW) and Concepts for Ocean worlds Life Detection Technology (COLDTech) programs. Their collective efforts have produced autonomy technology that could change how planetary missions are conducted, bringing a landing on these distant icy worlds one step closer to reality.